The days of an 18GB SCSI drive being the standard audio data storage currency are long gone, and we are now blessed with an embarrassment of available storage types and configurations: myriad options for SSDs, Thunderbolt connections, USB protocols and RAID levels all cover the land with a Thick Mist of Obfuscation.

And whilst manufacturers bandy impressive performance figures about, they don’t always correlate with real-world performance very well - especially with regard to how they perform for Digital Audio Workstation workloads. Likewise, many disk benchmark applications - such as the widely-used Blackmagic Disk Speed Test app - focus on video workloads instead of audio ones.

Blackmagic Disk Speed Test

But why are these so different? Why wouldn’t a video-focussed test be equally applicable to audio usage?

The answer lies in the pattern of data access performed on the drive: video projects typically access only a small number of files at any time - often just a single file, but more where a crossfade or insertion takes place, requiring layering of different files of footage simultaneously. Whilst these files can have fabulously high bit rates - e.g. 1700Mbps for a 4K ProRes video - only a typical maximum of three or four such files will be played simultaneously.

Audio files are much much smaller - 2.3Mbps for a 48kHz 24-bit stereo file - but they are played simultaneously on a massive scale: with a sample-heavy playback environment, it could easily be:

50 instrument tracks

Each playing 2 overlapping notes

Each with 4 mixed microphone positions

Multiply these together to get 400 simultaneous files that need to be read from disk. With spinning hard drives, this was really hard work for the drive: it would need to read a small segment from each individual sample file in turn - before it then starts and reads the next small segment from each sample file… and so on. And each time you’re switching from one file to the next, there’s no guarantee that the files are sitting next to each other on the disk platters - so the drive has to reposition the heads over the spinning disk, wait for the right bit of data to fly under the head, and then move on to the next file.
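To put rough numbers on this comparison, here is a minimal Python sketch of the arithmetic. It assumes every one of the 400 voices streams a 48kHz 24-bit stereo file, and that four video files play at once; both are simplifications for illustration rather than measured figures.

```python
def pcm_bit_rate_mbps(sample_rate_hz: int, bit_depth: int, channels: int) -> float:
    """Bit rate of uncompressed PCM audio, in megabits per second."""
    return sample_rate_hz * bit_depth * channels / 1_000_000


# Figures quoted in the text.
audio_mbps = pcm_bit_rate_mbps(48_000, 24, 2)  # one stereo sample file
voices = 50 * 2 * 4                            # tracks x notes x mic positions
video_mbps = 1700                              # one 4K ProRes file
video_files = 4                                # assumed simultaneous video files

# Assumption: every voice is a 48kHz 24-bit stereo stream.
audio_total_mbps = audio_mbps * voices
video_total_mbps = video_mbps * video_files

print(f"Audio: {voices} files, {audio_total_mbps:.1f} Mbps in total")
print(f"Video: {video_files} files, {video_total_mbps:.1f} Mbps in total")
```

Under these assumptions the audio workload moves less data in total than the video one, but spreads it across a hundred times as many files - which is why the access pattern, rather than the raw data rate, is what hurts.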

To be honest, it’s a wonder it ever worked at all.

So where video files could largely be written in a long contiguous block on a disk - and thus require sequential data access - audio projects will typically require random access for a multitrack workflow.

Solid state drives - SSDs - are much better suited to this task: as data is all stored electronically across a matrix of memory cells, no physical mechanisms hamper access to disparate areas of the disk, so the SSD can achieve the much higher random access data rates required for audio applications.
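The access pattern described above - a small segment from each file in turn, over and over - can be sketched in Python as follows. This is an illustration of the pattern only, not a benchmark: the file count, file size and segment size are arbitrary assumptions, the function names are made up for this sketch, and on a real machine the OS cache would hide most of the disk behaviour for files this small.

```python
import os
import tempfile


def make_sample_files(directory, count, size_bytes):
    """Create `count` dummy 'sample' files of `size_bytes` each."""
    paths = []
    for i in range(count):
        path = os.path.join(directory, f"sample_{i:03d}.bin")
        with open(path, "wb") as f:
            f.write(os.urandom(size_bytes))
        paths.append(path)
    return paths


def stream_round_robin(paths, segment_bytes):
    """Read one small segment from each file in turn until all are exhausted.

    Returns (total bytes read, number of segment reads). Every segment read
    is a hop to a different file, i.e. a potential head seek on a hard drive.
    """
    handles = [open(p, "rb") for p in paths]
    total = 0
    segment_reads = 0
    try:
        active = list(handles)
        while active:
            still_active = []
            for handle in active:
                data = handle.read(segment_bytes)
                if data:
                    total += len(data)
                    segment_reads += 1
                    still_active.append(handle)
            active = still_active
    finally:
        for handle in handles:
            handle.close()
    return total, segment_reads


with tempfile.TemporaryDirectory() as tmp:
    paths = make_sample_files(tmp, count=8, size_bytes=64 * 1024)
    total_bytes, segment_reads = stream_round_robin(paths, segment_bytes=4 * 1024)

print(f"Read {total_bytes} bytes in {segment_reads} segment reads")
```

Even this toy case of 8 small files needs 128 separate hops between files; scale that to 400 voices and it becomes clear why seek time dominates on a spinning disk.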

So, armed with a stopwatch and an exceedingly large cup of coffee, we looked to demystify the landscape and gather some useful data. Given that it’s challenging to reliably measure the number of simultaneously-playing voices (look at the Audio Track Playback Performance section of a previous blog article to see how low the usage is), our tests comprised the following actions in Logic Pro X 10.5.1, running under macOS 10.15.5 Catalina:

How long it takes to perform the initial offline export of 500 tracks - as per the Off-Line Track Export Performance section of a previous blog article: we selected a low-CPU project with no audio track playback, so this almost-entirely tests the write speed for the disk;

How long the second portion of the offline export takes: this rewrites all the audio files, normalising them and reducing the bit depth from 32-bit floating point to 24-bit. Consequently this is a mix of offline simultaneous disk read and write operations;

How long it takes to open the Spitfire BBC Symphony Orchestra full template: this template file (available from here) contains over 400 instances of the BBCSO plug-in, and the plug-in window handily has a little nubbin to show when sample loading has completed;

How long it takes to open a typical Spitfire BBC Symphony Orchestra project: this project included 67 BBCSO plug-ins, and was the one used in our shootout between the 2019 Mac Pro, 2018 Mac Mini and 2013 Mac Pro.

External SSD Tests

Our first suite of tests examined the performance of a SATA SSD housed in a variety of different external drive enclosures. We used a Samsung 860 EVO 4TB SSD, which features a 6Gbps SATA interface; consequently, even if it’s housed in an enclosure which can connect at 40Gbps to the host, the data transfer will be limited by the drive’s 6Gbps bottleneck.

The enclosures we tested were:

| Enclosure | Interface | Connection speed |
| --- | --- | --- |
| OWC ThunderBay 8 | Thunderbolt 3 | 40Gbps |
| OWC ThunderBay 4 mini | Thunderbolt 2 | 20Gbps |
| OWC Mercury Elite Pro Mini | USB 3.1 Gen 2 | 10Gbps |
| Startech S251BPU313 | USB 3.1 Gen 2 | 10Gbps |
| Startech S251SMU33EP | USB 3.0 | 5Gbps |

All enclosures were connected to a 2019 Mac Pro.
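The bottleneck reasoning can be written down as a simple minimum over the links in the chain. The sketch below uses only the nominal line rates quoted above and ignores protocol overhead, so the numbers are theoretical ceilings rather than predictions of measured throughput; the function name is made up for illustration.

```python
SATA_DRIVE_GBPS = 6  # Samsung 860 EVO SATA interface, as stated above

# Nominal host connection speeds of the enclosures under test (Gbps).
enclosures = {
    "OWC ThunderBay 8": 40,
    "OWC ThunderBay 4 mini": 20,
    "OWC Mercury Elite Pro Mini": 10,
    "Startech S251BPU313": 10,
    "Startech S251SMU33EP": 5,
}


def link_ceiling_gbps(drive_gbps, enclosure_gbps):
    """The slowest link in the chain sets the theoretical ceiling."""
    return min(drive_gbps, enclosure_gbps)


ceilings = {
    name: link_ceiling_gbps(SATA_DRIVE_GBPS, speed)
    for name, speed in enclosures.items()
}

for name, ceiling in ceilings.items():
    limiter = "drive" if ceiling == SATA_DRIVE_GBPS else "enclosure"
    print(f"{name}: {ceiling} Gbps ceiling (limited by the {limiter})")
```

On paper, only the 5Gbps enclosure is slower than the drive itself; every other enclosure should be limited by the 6Gbps SATA interface.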

Here’s how the different enclosures performed with regard to the Blackmagic Disk Speed Test:

Blackmagic Disk Speed Test on different external SSD enclosures

There are no major surprises here: all tests ran roughly as expected - with the exception of the Startech USB 3.1 enclosure. Despite being theoretically as fast as the OWC USB 3.1 enclosure, the Startech USB 3.1 enclosure performed over 10% slower than its cousin.

The connection speed of USB 3.0 is slightly slower than the SATA connection of the SSD under test - and consequently we should not be surprised that it performed slightly worse.

However (aside from the Startech USB 3.1 enclosure), the others all performed equally well - suggesting that the 6Gbps SATA connection was the limiting factor here.

There was roughly 18% difference between the fastest and slowest read speeds tested.

Looking at the performance for the offline export operation:

Offline track export times on different external SSD enclosures

And for loading the two project files:

Project loading times on different external SSD enclosures

As it’s difficult to interpret these figures as they are, let’s invert and normalise them, so the fastest results produce the tallest columns:

Inverse offline track export times on different external SSD enclosures

Inverse project loading times on different external SSD enclosures
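For anyone wanting to apply the same treatment to their own timings, the inversion and normalisation can be sketched as below. The timings here are hypothetical placeholders, not the measurements from these tests, and scaling the fastest result to 1.0 is an assumption about how the charts were normalised.

```python
def inverse_normalised(times_s):
    """Map elapsed times to relative speeds, with the fastest result scaled to 1.0."""
    inverses = {name: 1.0 / t for name, t in times_s.items()}
    best = max(inverses.values())
    return {name: inv / best for name, inv in inverses.items()}


# Hypothetical timings in seconds - NOT the measurements from these tests.
example_times = {"drive A": 40.0, "drive B": 50.0, "drive C": 80.0}
relative = inverse_normalised(example_times)

for name, score in relative.items():
    print(f"{name}: {score:.2f}")
```

With this scaling, a drive that takes twice as long as the fastest one scores half its height on the chart, which makes the results directly comparable with a throughput-style benchmark.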

With the offline export tests, a similar variation between the fastest and slowest results was seen as in the Blackmagic Disk Speed Test app, with the Thunderbolt connections generally outpacing the USB connections.

In the project loading tests, only a 12% variation between fastest and slowest was seen, with the slowest connection - USB 3.0 - surprisingly providing the fastest result. As yet, we do not know why this should be…

Internal vs External SSDs

We considered two internal storage options here - the built-in SSD of the 2019 Mac Pro (4TB option), and a Samsung 2TB 970 EVO Plus NVMe SSD hosted on a Sonnet M.2 4x4 PCIe card.

Both options have a fast connection to the Mac’s internal PCIe bus, running at 32Gbps - much faster than the SATA port from the previous tests.

Looking at the Blackmagic Disk Speed Test results, alongside the external SSD tests:

Blackmagic Disk Speed Test performance for internal SSDs vs external SSDs

There is a major improvement shown here, with the fastest data rates being over 5x quicker than even the fastest external SSD option provided, and with only a small variation between the built-in SSD and the add-on NVMe SSD.

Looking at the offline export and project loading tests:

Offline track export times for internal SSDs vs external SSDs

Project loading times for internal SSDs vs external SSDs

And their inverted, normalised representations:

Inverse offline track export times for internal SSDs vs external SSDs

Inverse project loading times for internal SSDs vs external SSDs

Whilst the internal drives still show a notable improvement over the external ones, the change is much more muted than the Blackmagic results would suggest:

The offline export performance is 2 - 2.5x the speed of the external drives;

The project load performance is - at best - 51% faster than the external drives.

Whilst a 51% improvement in performance is not to be sniffed at, the performance expectation should definitely not be set by the benchmarks provided by the Blackmagic Disk Speed Test app, or similar.

SSDs vs HDDs

This is going to be unfair, but let’s chart a spinning hard drive against these SSDs. The drive tested here was a Seagate 4TB 7200rpm SATA drive, housed in the OWC ThunderBay 8 enclosure.

Blackmagic Disk Speed Test of SSDs vs spinning hard drive

Blackmagic Disk Speed Test here shows the hard drive has only 6% of the performance of the fastest SSD option.

Looking at the DAW performance, and their normalised-inverted counterparts:

Offline track export times of SSDs vs spinning hard drive

Project loading times of SSDs vs spinning hard drive

Inverse offline track export times of SSDs vs spinning hard drive

Inverse project loading times of SSDs vs spinning hard drive

In the export tests, the hard drive achieved around 13% of the performance of the NVMe drive, and in the project loading test, around 21% - still unimpressive compared with modern solid-state options, but not quite as dire as the Blackmagic Disk Speed Test app purported.

RAID 0 Performance

Much is made of the benefits of using RAID 0 - striping data across multiple disks - to improve the performance of storage systems. Whilst the theory of staggering access times for multiple drives in parallel so as to increase the overall throughput is reasonable, in practice RAID 0 also has drawbacks:

The failure of any disk in the array will result in the effective loss of data for the entire array. The failure probability for the array is therefore roughly the sum of the failure probabilities of all the disks in it - e.g. a 4-disk RAID 0 array is around 4 x more likely to fail than a single disk;

All disks in the array must be of equal size - so if you built an array out of 2TB drives, and then wanted to expand it later, you can still only use 2TB drives for the expansion (unless you want to throw away the original drives).

For this reason, RAID 0 is most appropriately used as a temporary ‘scratch’ drive for work-in-progress files for a project. These files should be backed up frequently, and archived onto long-term protected storage once the project has been completed: RAID 0 is known as ‘Scary RAID’ for good reason.
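The "4 x more likely to fail" rule of thumb is a close approximation when the individual failure probabilities are small. A minimal sketch of the exact calculation, assuming disks fail independently and using a purely hypothetical 2% failure probability per disk over some period:

```python
def array_failure_probability(disk_failure_probability, disks):
    """Probability that at least one disk fails, assuming independent failures.

    For RAID 0, any single disk failure loses the whole array.
    """
    return 1.0 - (1.0 - disk_failure_probability) ** disks


p = 0.02  # hypothetical per-disk failure probability, for illustration only

single = array_failure_probability(p, 1)
four_disk = array_failure_probability(p, 4)
ratio = four_disk / single

print(f"Single disk: {single:.4f}")
print(f"4-disk RAID 0: {four_disk:.4f} ({ratio:.2f}x a single disk)")
```

The exact ratio comes out slightly under 4, because the simple sum counts the cases where more than one disk fails more than once; for small probabilities the difference is negligible.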

One of the parameters of a striped RAID array is the ‘chunk size’ - that is, how much of a file is stored as a chunk on one disk before moving onto the next disk in the array to store the following chunk. Performance can be very dependent on the chunk size matching the demands of specific applications, and with systems having specific, quantifiable applications running - such as a single video editor application, or a single database application - tuning the chunk size could yield measurable benefits.

However, a DAW does not necessarily perform uniformly: whilst the DAW itself could match a specific chunk size for audio track recording and playback, every instrument plug-in which streams a sample library could be optimised for a different chunk size - and the OS itself could ideally use yet another chunk size for copying or manipulating files.

For these tests, we assembled a 2-disk and 4-disk RAID 0 volume using NVMe drives under AppleRAID, with the default 32kB chunk size (for want of any uniformity of application).
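To make the chunk size idea concrete, here is a simplified sketch of how a striped volume might map a logical byte offset onto its member disks. It assumes a plain round-robin layout and treats 32kB as 32 x 1024 bytes; the actual on-disk layout used by AppleRAID is not covered here, and the function names are made up for illustration.

```python
CHUNK_BYTES = 32 * 1024  # assumed interpretation of the 32kB default chunk size


def locate(offset, disks, chunk_bytes=CHUNK_BYTES):
    """Return (disk index, byte offset on that disk) for a logical volume offset."""
    chunk_index, within_chunk = divmod(offset, chunk_bytes)
    disk = chunk_index % disks          # chunks are dealt out round-robin
    stripe_row = chunk_index // disks   # how many full stripes precede this chunk
    return disk, stripe_row * chunk_bytes + within_chunk


def disks_touched(offset, length, disks, chunk_bytes=CHUNK_BYTES):
    """Return the sorted list of disks involved in one read of `length` bytes."""
    first = offset // chunk_bytes
    last = (offset + length - 1) // chunk_bytes
    return sorted({chunk % disks for chunk in range(first, last + 1)})


small_read = disks_touched(0, 16 * 1024, disks=4)    # a 16kB request
large_read = disks_touched(0, 128 * 1024, disks=4)   # a 128kB request

print(f"16kB read touches disks {small_read}")
print(f"128kB read touches disks {large_read}")
```

In this model, a request smaller than the chunk size is served by a single disk and gains nothing from striping, whereas a request spanning several chunks can be spread across all of the drives - which is one way that a mismatch between application transfer size and chunk size could blunt RAID 0's benefits.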

Here’s what Blackmagic Disk Speed Test found:

Blackmagic Disk Speed Test for NVMe RAID 0 arrays

This shows a useful rise in performance - up to 2.5x - for additional drives in RAID 0 configuration, above the already-impressive performance of the single NVMe drive. Let’s see how that bears fruit in the DAW context:

Offline track export times for NVMe RAID 0 arrays

Project loading times for NVMe RAID 0 arrays

The results here do not at all reflect the improvements that Blackmagic Disk Speed Test would suggest - indeed, apart from the second stage of the offline track export, the results are remarkably flat across the board. The second stage of the offline track export does however gain a 25% improvement, which is contrary to all the other measurements here.

What does this mean? It may be that with the currently-fastest-available storage, a single drive is able to keep up with the massively-parallel DAW-style storage demands from the processor as fast as the processor is able to issue them - and that, even with the fastest Mac currently available, RAID 0 arrays offer no benefit for such workloads. The Blackmagic Disk Speed Test results suggest otherwise for video workloads - but the same requirements do not apply to DAW tasks.

Alternatively it may be due to a mismatch between the individual data transfer sizes from Logic (and the BBCSO plug-in) and the RAID chunk size: we may be seeing a good match between the second stage of the offline track export (hence its improving performance as the RAID array increases) - but a poor match in the other tests.

Looking at the same question with other storage options - SSDs in the OWC Thunderbolt 3 enclosure, and spinning hard drives in the OWC Thunderbolt 3 enclosure - here’s what we see:

Blackmagic Disk Speed Test for SSD and HDD RAID 0 arrays

Blackmagic Disk Speed Test suggests that the 2-disk RAID 0 SATA SSD array offers around 2x the performance of a single SSD, whereas a 4-disk RAID 0 hard drive array offers around 3-4x the performance of a single drive.

Looking at the DAW tests and their normalised, inverted counterparts:

Offline track export times for SSD and HDD RAID 0 arrays

Project loading times for SSD and HDD RAID 0 arrays

Inverse offline track export times for SSD and HDD RAID 0 arrays

Inverse project loading times for SSD and HDD RAID 0 arrays

As with the NVMe RAID 0 tests, the second stage of the offline track export showed the biggest improvements here, tending towards the performance improvements that Blackmagic Disk Speed Test was exhibiting - but otherwise, although some improvement could be seen for striping multiple disks together, the performance increase was very insubstantial compared with the benchmarks.

SoftRAID Performance

There are very few software RAID options for macOS - and the chances are if you’re RAIDing and you’re not using AppleRAID, you’re using SoftRAID instead.

The popular perception is that AppleRAID has long languished, and that if you’re serious about RAID, you should be using SoftRAID. Certainly SoftRAID offers features that AppleRAID does not - including the ability to run a RAID 5 volume: one that combines striped disks with a data protection layer, to prevent the loss of data if a single disk in the array fails.

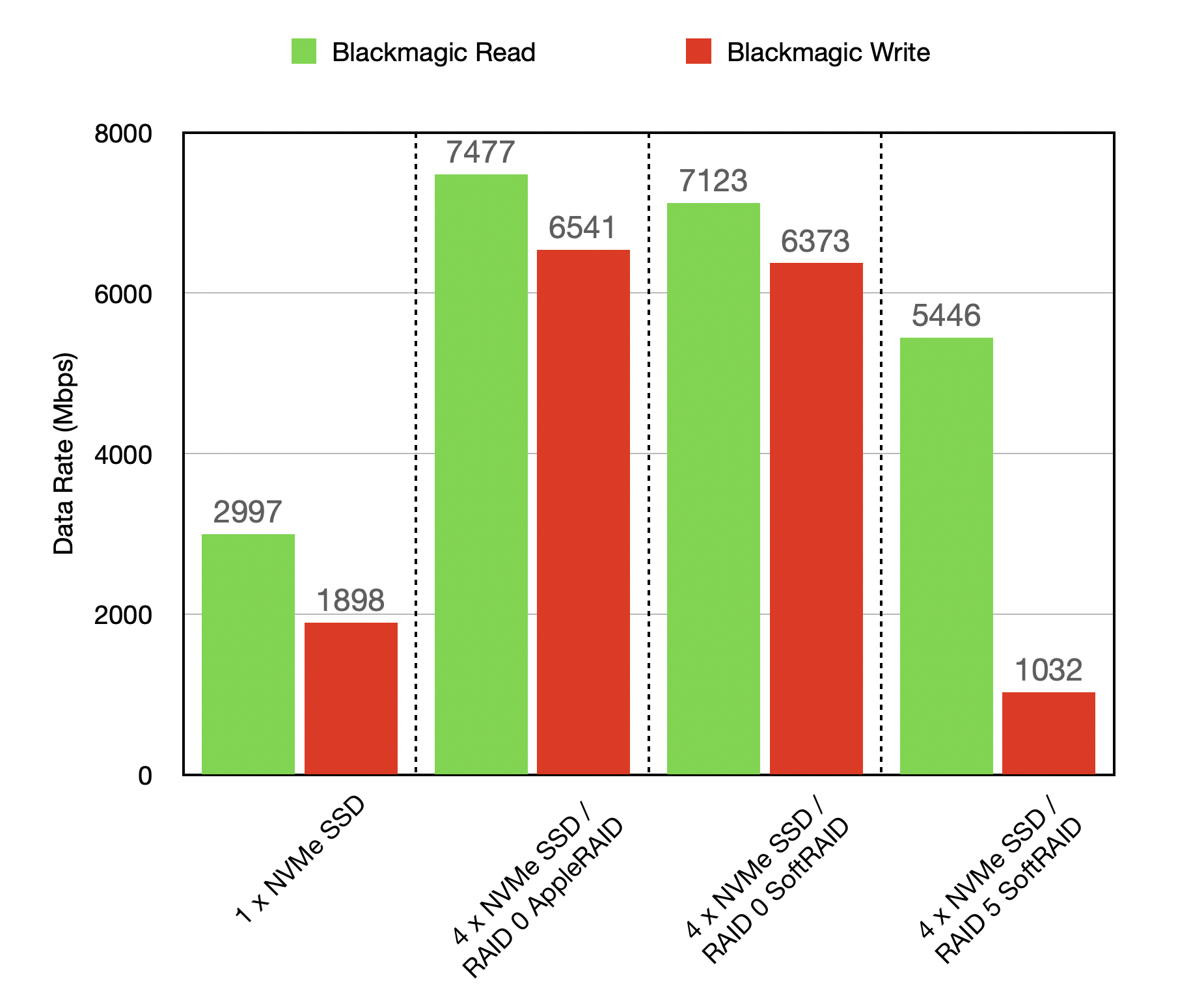
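As background on what a RAID 5 data protection layer generally involves: the textbook approach stores an XOR parity chunk alongside the data chunks in each stripe, so that any one missing chunk can be rebuilt from the others. The sketch below illustrates that generic technique only; it is not a description of SoftRAID's implementation, and the tiny 4-byte chunks are purely for illustration.

```python
from functools import reduce


def xor_blocks(blocks):
    """Byte-wise XOR of a list of equal-length blocks."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*blocks))


# One stripe on a 4-disk array: three data chunks plus one parity chunk.
data_blocks = [b"AAAA", b"BBBB", b"CCCC"]
parity = xor_blocks(data_blocks)

# Simulate losing the disk that held the second chunk, then rebuild it
# from the surviving data chunks and the parity chunk.
survivors = [data_blocks[0], data_blocks[2], parity]
rebuilt = xor_blocks(survivors)

print(rebuilt == data_blocks[1])
```

The cost of this protection is that every write must also update the parity chunk, so a parity-based array does more work per write than a plain striped one.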

Here are the Blackmagic Disk Speed Test results for SoftRAID RAID 0 and RAID 5 on a 4-disk NVMe array, compared with a single drive and AppleRAID:

Blackmagic Disk Speed Test for AppleRAID and SoftRAID arrays

The results here are disappointing - the RAID 0 performance is slightly worse than the AppleRAID performance, and the RAID 5 performance - especially in terms of write speed - is worse yet.

But, as we have seen, this does not always tell the whole story:

Offline track export times for AppleRAID and SoftRAID arrays

Project load times for AppleRAID and SoftRAID arrays

Inverse offline track export times for AppleRAID and SoftRAID arrays

Inverse project loading times for AppleRAID and SoftRAID arrays

These show a very mixed bag of results:

SoftRAID RAID 5 performed worst in the offline export tests - presumably due to the additional drive writes which are required to maintain the data protection layer;

But SoftRAID RAID 5 performed just as well as SoftRAID RAID 0 - and in excess of either the single drive or AppleRAID - on the project loading tests;

SoftRAID RAID 0 performed slightly worse than AppleRAID for the offline export tests.

This underlines the difficulty in setting up a RAID for a mixed-application environment: although it might be possible to tune the RAID configuration for optimised performance in one respect, doing so may well reduce the performance in other respects.

Disk Format - HFS+ vs APFS

Until recently, the standard disk filesystem for Macs was HFS+. This was introduced in 1998 with the release of Mac OS 8.1, and was largely based on its forerunner, HFS, which was first introduced back in 1985.

Apple’s new filesystem - APFS - was first made public on the Mac in macOS 10.13 High Sierra, and has seen steadily increased adoption ever since: it is now the only choice of bootable filesystem for Macs running macOS 10.14 Mojave onwards.

APFS introduced a whole raft of filesystem modernisations compared with its ancient predecessor, including:

Efficient space utilisation and shared data blocks for cloned files;

Increased protection against filesystem corruption;

Snapshots;

Shared storage for multiple volumes;

Multi-threaded data structures - and more.

The filesystem causes increased fragmentation of files and metadata across the disk, as part of the design to support the new facilities that APFS offers. SSDs can handle this easily, but it causes massive sluggishness when deployed on spinning hard drives - see this excellent article from Mike Bombich, author of Carbon Copy Cloner for more analysis.

On SSDs, the benefits that APFS offers are attractive, now that it can be considered stable and trustworthy - but do such benefits come with a performance penalty compared with ‘good’ old HFS+?

Our tests so far have been run entirely on HFS+ disks - with the exception of the built-in SSD tests: as our test machine was running macOS 10.15 Catalina, the internal system drive was running APFS - apparently with no adverse performance effects.

We tested our 4-drive NVMe RAID 0 array running HFS+ against the same setup, but running APFS:

Blackmagic Disk Speed Test for HFS+ vs APFS disks

In this test, quite the contrary to suffering any ill-effects, APFS performs a little better than HFS+.

Offline track export times for HFS+ vs APFS disks

Project load times for HFS+ vs APFS disks

And the DAW-specific tests showed almost no difference in performance for moving to APFS - a minor improvement in one test, and a minor degradation in another test. Certainly nothing to dissuade one from adopting APFS whole-heartedly.

Conclusions

Whilst the best system performance comes at a price, for DAW applications there are some good rules of thumb that can be drawn from these tests:

SSDs are faster than spinning hard drives (duhhh…) - but spinning hard drives are much cheaper and have much higher capacities. Deploy each of them appropriately where it makes most sense;

NVMe SSDs are faster than SATA SSDs - but require an internal PCIe connection to yield the best performance. NVMe SSDs also currently have a lower maximum capacity and are more expensive than their SATA counterparts;

If NVMe performance is not practically achievable, then SATA performance is not toooo far behind, and should definitely not be considered a poor relation;

Many interfaces for external SATA drives - Thunderbolt 3, Thunderbolt 2, USB 3.1, USB 3.0 - have speeds which are not too dissimilar from each other for a single drive. But note that once multiple drives are to be connected and accessed, multi-drive Thunderbolt enclosures will offer a greater throughput and more robust connection than USB;

RAID is often not consistently worthwhile for DAW applications - and its management overhead can be more trouble than it’s worth;

APFS offers no significant performance penalty on SSDs, and has worthwhile benefits. But APFS-formatted drives cannot be accessed on machines running macOS 10.12 or older.